Introduction: Ancient Wisdom for Modern Leadership

In an era defined by volatility, ambiguity, and profound ethical complexity, modern leaders are often left searching for a stable foundation upon which to build resilient teams and sustainable organizations. The conventional playbooks, focused solely on short-term outcomes and external metrics, frequently fall short. It is in this environment that turning to timeless wisdom is not a retreat from the contemporary world, but a strategic move toward a more powerful and sustainable form of engagement.

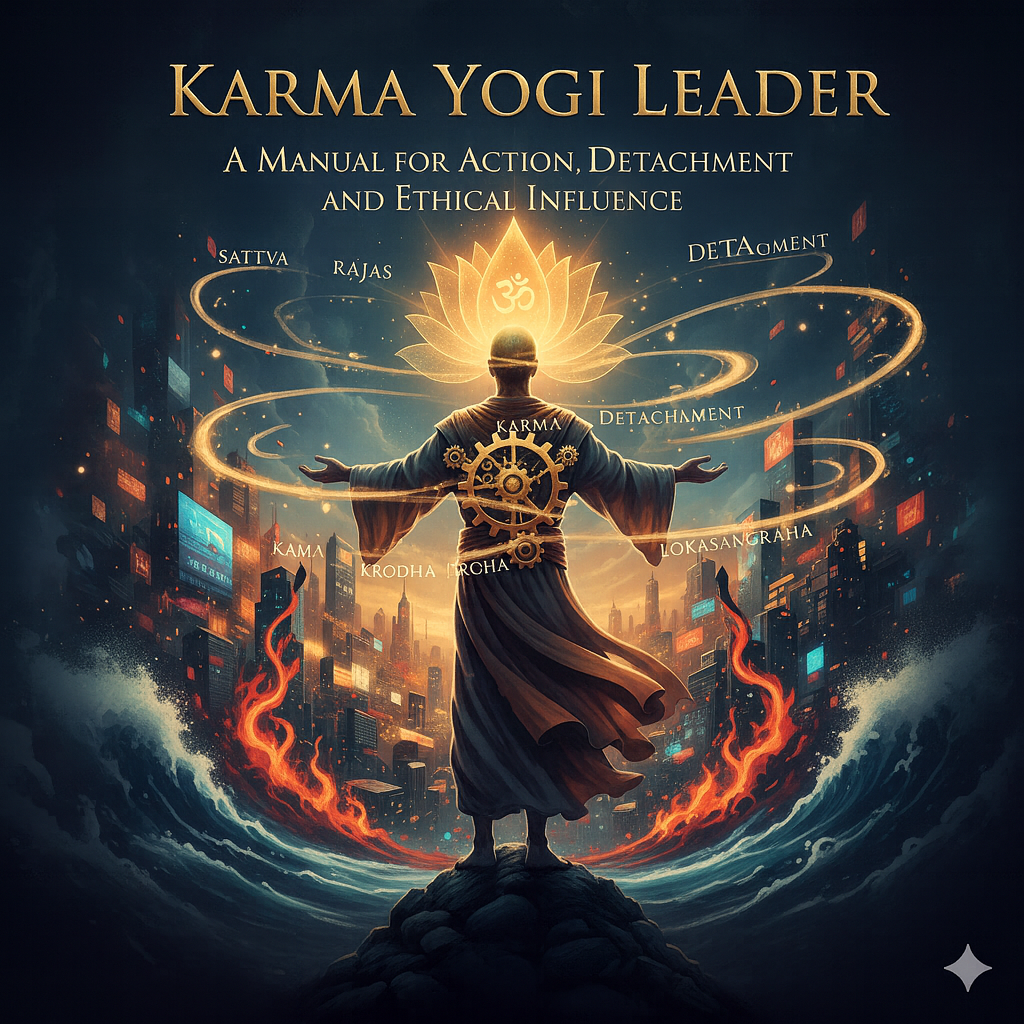

This manual is built on the premise that the ancient philosophy of Karma Yoga, a core tenet of the Bhagavad Gita, offers a remarkably relevant framework for today’s leadership challenges. Karma Yoga is not about renouncing professional life or retreating from ambition. Rather, it is a sophisticated system for engaging with the world more effectively. It provides a path to purposeful action, mental equanimity, and profound influence, grounded in a deeper understanding of our role and responsibilities.

The objective of this manual is to translate these profound principles into a practical and actionable guide. It is designed for leaders who seek not just to succeed, but to lead with integrity, to build resilience in themselves and their teams, and to elevate their impact from mere management to true stewardship.

——————————————————————————–

1. The Foundational Principle: The Inevitability of Action

For any individual in a position of leadership, action is the fundamental state of existence. It is a continuous and unavoidable reality. Every decision made, every email sent, every conversation held—and even the conscious choice to refrain from these—constitutes an action with tangible consequences. The notion of true “inaction” is an illusion.

The core teaching of Karma Yoga begins with this powerful realization. The mind and body are in a state of perpetual activity; there is no moment of genuine inactivity. Even a thought is a form of action, setting in motion a cascade of internal and external events. This understanding is the bedrock of conscious leadership.

“To think is to act. In my body, every cell is active and mind is never inactive. Action is therefore an unavoidable reality of our existence.”

The implication for a leader is profound and clarifying. Since action is constant, the critical choice is not whether to act, but how to act. The primary responsibility of a leader, therefore, is to bring deliberate awareness and clear intention to this continuous stream of actions. This shift in perspective moves the focus from anxiously deciding what to do next to consciously deciding how to be in every moment of doing.

If action is indeed inevitable, what quality of action leads to the most effective, ethical, and sustainable outcomes?

2. The Art of Selfless Action: Redefining Success with Nishkama Karma

The conventional model of leadership is often fueled by a deep-seated attachment to results. This attachment—to profit margins, to promotions, to praise, to a specific vision of success—is a primary source of anxiety, burnout, and compromised ethics. Nishkama Karma, or the principle of selfless action, presents a powerful alternative that redefines success and liberates the leader from this volatile cycle.

The principle of Nishkama Karma is the practice of performing one’s duty with absolute excellence while relinquishing attachment to the outcomes. This does not mean acting without self-interest, but rather acting without being psychologically enslaved to the outcomes of your actions. The focus shifts entirely from the “fruit” to the quality of the action itself. The core operational instruction is to act asaktah: to perform one’s duties with full commitment, but without attachment.

This approach is not a passive acceptance of fate; it is a strategic framework for peak performance and mental clarity.

| Dimension | Outcome-Attached Leader | Process-Oriented Karma Yogi Leader |
| --- | --- | --- |
| Focus | Primarily on personal gain, recognition, and specific results. | On the quality, integrity, and excellence of the action itself. |
| Emotional State | Experiences high anxiety, fear of failure, and emotional volatility tied to outcomes. | Maintains equanimity and mental clarity, leading to better decision-making under pressure. |
| Decision-Making | Prone to short-term thinking and ethical compromises to secure a desired result. | Able to make objective, long-term strategic choices based on principles and duties. |

The organizational benefit of this approach is immense. When a leader demonstrates equanimity in the face of both success and failure—because they are detached from the “fruits”—they signal to the entire organization that the process of striving, learning, and acting with integrity is what is truly valued. This cultivates a culture of deep psychological safety. It removes the existential threat associated with failure and creates the conditions for genuine innovation, empowering teams to perform at their best without the paralyzing fear of being punished for an undesirable outcome.

This raises a deeper question: if the leader’s focus is on the action itself, not the results, then who is truly the agent of that action?

3. Deconstructing Agency: The Leader as a Conscious Instrument

A primary pitfall in leadership is the trap of the ego, or ahankara—the persistent feeling that “I am the doer.” This belief, while seemingly a source of strength, is actually a cause of great fragility and burnout. Understanding the true source of action is the key to developing profound humility and unshakeable resilience.

This philosophy does not advocate for “no action,” which is an impossibility. Instead, it guides the leader to realize their true nature as “actionless awareness”—a state of pure consciousness that witnesses action without being entangled by it. This true Self is the Atma. The actions themselves are carried out by prakriti—the dynamic field of nature and organizational life. The ego’s fundamental error is to claim authorship, to falsely believe, “I alone achieved this,” or “I alone am to blame for this failure.”

Think of the Self as the silent, unshakeable movie screen, while prakriti (the organizational dynamics, market forces, and team actions) is the movie being projected upon it. The ego’s mistake is believing it is the movie, experiencing every twist and turn as a personal crisis. The Karma Yogi learns to identify with the screen—aware, present, and unaffected.

This is not an abstract concept but a practical diagnostic tool, because prakriti operates through three constituent forces or gunas:

- Sattva: The quality of clarity, balance, harmony, and purpose.

- Rajas: The quality of action, ambition, passion, and agitation.

- Tamas: The quality of inertia, obscurity, resistance, and confusion.

A leader who understands this framework can analyze challenges not in personal terms, but as an interplay of these forces within their team, their market, and themselves. This mindset shift offers powerful benefits:

- Reduced Burnout: By depersonalizing failures and successes, the leader avoids the emotional exhaustion that comes from carrying the entire weight of the organization. Setbacks are seen as systemic data, not personal indictments.

- Enhanced Collaboration: This mindset fosters a deep appreciation for the contributions of every team member and the complex interplay of the gunas. Outcomes are understood as a collective result, dismantling the silos created by ego.

- Greater Humility: It grounds the leader, preventing the arrogance that can accompany success and the despair that often follows setbacks. This humility makes them more approachable, open to feedback, and ultimately, more effective.

This internal understanding of agency is the foundation for maintaining external balance, regardless of the outcome.

4. The Leader as an Exemplar: The Duty of Setting the Standard (Lokasangraha)

A leader’s influence extends far beyond their direct reports or official duties. Every action, decision, and emotional response is observed, interpreted, and often emulated, creating the cultural blueprint for the entire organization. This immense power comes with a profound and non-negotiable responsibility.

The principle of lokasangraha teaches that a leader (shresthudu) has a sacred duty to act with impeccable integrity for the welfare and stability of the collective. The ancient wisdom states, “What a great person does, others follow.” A leader’s actions set the standard for what is acceptable, what is valued, and what is condemned within the culture. This is the essence of leading by example.

Imagine a company facing a significant crisis. One leader, driven by ahankara (ego) and Kama (the desire to protect their status), chooses a path of secrecy and blame. This action teaches the organization that self-preservation trumps integrity. Another leader, practicing Nishkama Karma, is detached from the personal outcome of the crisis. Having mastered Krodha (anger), they do not need to find a scapegoat. They choose radical transparency and public accountability, setting a powerful precedent that honesty, courage, and collective responsibility are the organization’s true north. The first leader weakens the system; the second strengthens it for generations to come.

This duty is absolute. Even a leader who may feel they have earned the right to be “above the rules” must adhere to the highest standards. Their every move is under a microscope, and any deviation is seen as permission for others to do the same. This responsibility is not a burden but a potent tool for shaping a healthy, ethical, and high-performing culture. To uphold these external standards, however, a leader must first win the critical battles within.

5. Mastering the Inner World: Conquering the Twin Obstacles of Desire and Anger

The most critical battleground for any leader is their own internal landscape. Brilliant strategies and talented teams can all be undone if a leader’s judgment is clouded by internal turmoil. To act with clarity and wisdom, a leader must first learn to master the powerful forces within their own mind.

Karma Yoga identifies two primary enemies of wise leadership, born from the agitated state of rajas guna: desire (Kama) and anger (Krodha). The source texts do not treat these lightly; they are described with grave intensity. Kama is mahasana (all-devouring) and mahapapma (a great sinner): an insatiable fire that consumes clarity, hijacks purpose, and envelops wisdom as smoke covers a flame.

In a modern leadership context, these forces manifest in predictable ways:

- Desire (Kama): This is the ravenous craving for a specific outcome, an attachment to status, or an unchecked need for recognition. It leads to biased decisions, favoritism, and a willingness to take unethical shortcuts to secure a coveted result.

- Anger (Krodha): This is the destructive frustration that erupts when desires are thwarted. It appears as impatience, the creation of a blame culture, and communication that erodes trust. Anger is the toxic byproduct of unfulfilled attachment.

To master these forces, the philosophy offers a clear operational schematic—an internal chain of command for self-regulation:

- The Self (Atma) is supreme, the silent witness.

- The Intellect (buddhi) is its chief executive, capable of discernment.

- The Mind is the restless manager, subordinate to the intellect.

- The Senses are field agents, reporting to the mind.

The undisciplined leader allows the senses and mind to run the show, reacting impulsively to every stimulus. The Karma Yogi leader uses their buddhi to establish clear command. They observe the rise of desire or anger not as a directive for action, but as data from the field. This pause allows the intellect to intervene, choosing a response aligned with duty and principle, not impulse.

This internal mastery is the final piece of the puzzle, enabling a leader to perform effective, selfless, and influential action in the world, free from the distortions of ego.

——————————————————————————–

Conclusion: Leading as Action in Awareness

The path of the Karma Yogi Leader is a transformative journey from reactive management to conscious stewardship. It is built on a series of profound yet practical principles: recognizing that action is inevitable, which places the focus on the quality of our engagement; understanding that true power lies in detachment from results, which frees us to act with clarity and courage; deconstructing the ego’s illusion of being the “doer,” which fosters humility and resilience; accepting the solemn duty to lead by example, which shapes an ethical culture; and finally, mastering the inner world, which is the ultimate foundation for wise leadership.

This framework is not a restrictive doctrine but a liberating path. It offers leaders a way to navigate complexity with a steady mind, to inspire teams with authentic integrity, and to build organizations that are not only successful but also sources of human flourishing. It is the art of leading as a form of conscious action in a state of profound awareness.