In a world obsessed with hustle culture, social-media validation, and endless striving, true fulfillment often feels just out of reach. What if the secret isn’t in acquiring new goals but in letting go of old attachments? What if suffering—rather than something to avoid—holds the key to meaning?

Drawing on the wisdom of Harvard’s Arthur C. Brooks, Jordan Peterson, and Holocaust survivor Viktor Frankl, this post explores a counterintuitive truth:

Happiness isn’t a destination. It’s the byproduct of a meaningful life.

The Trap of Endless Wanting: Arthur C. Brooks’ Reverse Bucket List

Arthur C. Brooks, Harvard professor and co-author of Build the Life You Want, discovered that achieving every milestone on his bucket list didn’t deliver lasting satisfaction. He describes reaching his 50s with career prestige, financial stability, and global recognition—yet feeling surprisingly empty.

Why?

Because the brain is engineered for survival, not sustained bliss. Every achievement quickly becomes the new baseline, fueling the “hedonic treadmill.”

Brooks proposes a simple antidote: the reverse bucket list.

Instead of writing down what you want next, you list the desires that are controlling you—especially those tied to ego, comparison, and status.

Then you cross them out.

His formula explains why this works:

Satisfaction = (What You Have) / (What You Want)

Reduce the denominator, and satisfaction increases.
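To make the arithmetic concrete, here is a minimal Python sketch of the ratio. The function name `satisfaction` and the numeric scores are hypothetical and purely illustrative; Brooks presents the formula as a mental model, not as something to measure precisely.

```python
def satisfaction(haves: float, wants: float) -> float:
    """Illustrative version of the ratio: what you have / what you want."""
    if wants <= 0:
        raise ValueError("wants must be positive")
    return haves / wants


# Hypothetical scores: 6 things you have, 12 things you want.
before = satisfaction(haves=6, wants=12)

# Cross four wants off the list; what you have stays exactly the same.
after = satisfaction(haves=6, wants=8)

print(before, after)  # 0.5 0.75
```

Nothing was acquired between the two calls. The ratio rose only because the list of wants got shorter, which is the point of the reverse bucket list.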

How to Make One

- Sit quietly and list 5–10 ego-driven wants.

- Strike through each, saying: “This no longer owns me.”

- Refocus on Brooks’ four stable pillars: faith (or a life philosophy), family, friendship, and meaningful work.

As Brooks puts it:

“Intention is fine, but attachment is bad.”

The Four False Idols: Modern Substitutes for Fulfillment

Brooks draws from St. Thomas Aquinas to identify four distractions that distort our pursuit of happiness: money, power, pleasure, and fame. They appear promising because they satisfy old evolutionary drives—but they ultimately leave us emptier.

| Idol | Core Drive | Pitfall | Detachment Tip |
|---|---|---|---|
| Money | Security | Endless comparison | Practice daily gratitude. |
| Power | Control | Resentment & fear | Empower others. |
| Pleasure | Escape | Short-lived highs | Pair pleasure with purpose. |
| Fame | Validation | External identity | Limit metrics & comparison. |

Identifying your dominant idol is the first step toward loosening its grip.

Jordan Peterson: Why Happiness Cannot Be the Goal

If Brooks offers gentle tools for detachment, Jordan Peterson offers stark realism:

“Life is suffering.”

In 12 Rules for Life, Peterson argues that happiness is too unstable to serve as a life’s aim. Pain lasts longer than pleasure, chaos is guaranteed, and the brain evolved to prioritize survival over joy.

What sustains us is not momentary happiness but responsibility.

Peterson’s philosophy condenses into six practical principles:

- Aim high at something noble.

- Take responsibility—begin with manageable order (“clean your room”).

- Confront suffering instead of avoiding it.

- Tell the truth, including uncomfortable truths about yourself.

- Serve others to escape self-absorption.

- Commit to the good, even when the world feels irrational.

A Bridge to Frankl

Peterson’s core insight mirrors an older philosophical truth:

Suffering is universal—and meaning is what transforms it.

This sets the stage for Viktor Frankl.

Viktor Frankl: Meaning as Humanity’s Lifeline

Viktor Frankl, Jewish psychiatrist and Auschwitz survivor, developed logotherapy, a system built on the idea that humans are driven not by pleasure (Freud) or power (Adler), but by meaning.

In Man’s Search for Meaning, Frankl observes that those who endured the camps were often those who held onto a purpose—future work, a loved one, or a belief worth suffering for.

Frankl identifies three sources of meaning:

- Creation — contributing or building something.

- Experience — love, beauty, nature, art.

- Attitude — choosing your response to suffering.

One of his most influential tools is paradoxical intention, a technique in which you deliberately try to bring about, or even exaggerate, the very fear or symptom that is causing anxiety.

By leaning into it, the fear loses its power.

Simplified Example

- Insomnia: try to stay awake instead of forcing sleep. This removes the pressure to fall asleep and lets sleep return naturally.

Frankl also emphasizes dereflection (shifting attention away from the self) and Socratic dialogue (questioning your beliefs to uncover deeper meaning). These tools help people navigate grief, trauma, and existential despair with dignity and agency.

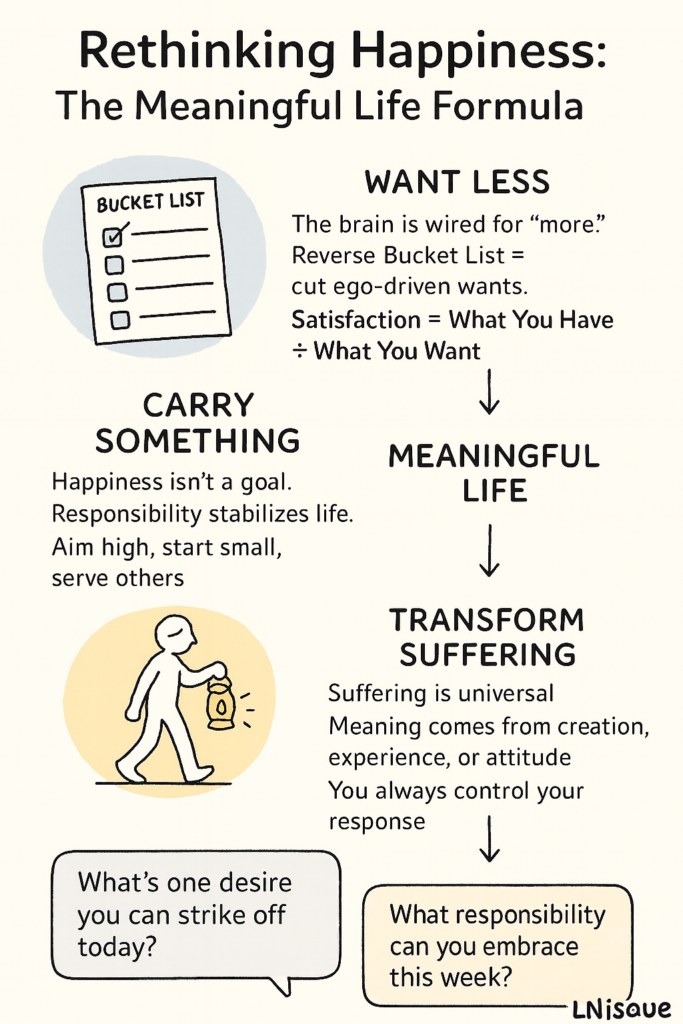

A Unified Path Forward

Together, Brooks, Peterson, and Frankl offer a three-step blueprint for a meaningful life:

1. Want less.

Detach from ego-driven desires (Brooks).

2. Carry something.

Take responsibility for something that matters (Peterson).

3. Transform suffering.

Choose your attitude and meaning (Frankl).

The world in 2025 may feel chaotic, but meaning is always available. Frankl endured unimaginable suffering in part by envisioning a future rooted in purpose. We cannot always choose our circumstances, but we can choose our response.

Your Turn: A Simple Reflection to Begin Today

Take a moment and answer:

1. What’s one desire you can strike off your reverse bucket list today?

2. What’s one responsibility you can embrace this week that moves you toward meaning?

Share your reflection below—your insight may help someone else begin their journey too.